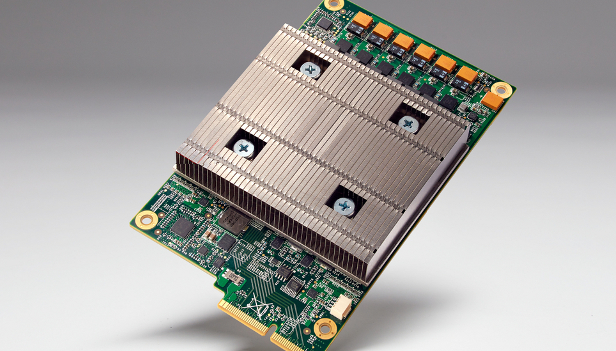

Forget the CPU, GPU, and FPGA: Google says its Tensor Processing Unit, or TPU, advances machine learning capability by a factor of three generations.

“TPUs deliver an order of magnitude higher performance per watt than all commercially available GPUs and FPGA,” said Google CEO Sundar Pichai during the company’s I/O developer conference yesterday.

TPUs have been a closely guarded secret at Google, but Pichai said the chips powered the AlphaGo computer that beat Lee Sedol, a world champion at the enormously complex board game Go.

Pichai didn’t go into detail about the Tensor Processing Unit, but the company disclosed a little more information in a blog post published the same day as Pichai’s revelation.

“We’ve been running TPUs inside our data centers for more than a year, and have found them to deliver an order of magnitude better-optimised performance per watt for machine learning. This is roughly equivalent to fast-forwarding technology about seven years into the future (three generations of Moore’s Law),” the blog said. “TPU is tailored to machine learning applications, allowing the chip to be more tolerant of reduced computational precision, which means it requires fewer transistors per operation. Because of this, we can squeeze more operations per second into the silicon, use more sophisticated and powerful machine learning models, and apply these models more quickly, so users get more intelligent results more rapidly.”
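To make the reduced-precision trade-off concrete, here is a minimal sketch that computes the same dot product twice: once in floating point and once with both vectors squeezed into 8-bit integers. Google has not disclosed the TPU's number format, so the 8-bit width, the symmetric scaling scheme, and the helper names `quantize` and `quantized_dot` are all assumptions made purely for illustration.

```python
import random

def quantize(values, bits=8):
    """Map floats to signed integers sharing one scale factor.

    Assumption: simple symmetric quantization, chosen for illustration only;
    the article does not say how the TPU represents numbers.
    """
    qmax = 2 ** (bits - 1) - 1              # 127 when bits == 8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0
    return [round(v / scale) for v in values], scale

def dot(a, b):
    """Plain dot product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))

def quantized_dot(a, b, bits=8):
    """Dot product done on small integers, rescaled to floats at the end."""
    qa, scale_a = quantize(a, bits)
    qb, scale_b = quantize(b, bits)
    return dot(qa, qb) * scale_a * scale_b

rng = random.Random(0)                      # fixed seed for repeatable runs
weights = [rng.uniform(-1, 1) for _ in range(256)]
inputs = [rng.uniform(-1, 1) for _ in range(256)]

exact = dot(weights, inputs)
approx = quantized_dot(weights, inputs)

print(f"full precision: {exact:.4f}")
print(f"8-bit integers: {approx:.4f}")
print(f"absolute error: {abs(exact - approx):.4f}")
```

The integer version gives up a little accuracy, but every multiply works on small integers, which is the kind of operation that needs far fewer transistors in hardware than a full floating-point unit.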

The tiny TPU can fit into a hard drive slot within the data center rack and has already been powering RankBrain and Street View, the blog said.

Don’t give up on the GPU or CPU

What isn’t known is what exactly the TPU is. SGI had a commercial product called a Tensor Processing Unit in its workstations in the early 2000s that appears to have been a Digital Signal Processor, or DSP. A DSP is a dedicated chip that does a repetitive, simple task extremely quickly and efficiently. But according to Google there’s no connection.

Analyst Patrick Moorhead of Moor Insights & Strategy, who attended the I/O developer conference, said that, from what little Google has revealed about the TPU, he doesn’t think the company is about to abandon traditional CPUs and GPUs just yet.

“It’s not doing the teaching or learning,” he said of the TPU. “It’s doing the production or playback.”

Moorhead said he believes the TPU could be a chip that runs machine learning algorithms after they have been crafted on more power-hungry GPUs and CPUs.

As to Google’s claim that the TPU’s performance is akin to accelerating Moore’s Law by seven years, he doesn’t doubt it. He sees it as similar to the relationship between a traditional ASIC (application-specific integrated circuit) and a CPU.

ASICs are hard-coded, highly optimised chips that do one thing really well. They can’t be changed like an FPGA but offer huge performance benefits. He likened the comparison to decoding an H.265 video stream with a CPU versus an ASIC built for that task. A CPU without dedicated circuits would consume far more power than the ASIC at that job.

One issue with ASICs, though, is their cost and their permanent nature, he said. The only way to change the algorithm, whether to fix a bug or make an improvement, is to make a new chip. There’s no reprogramming. That’s why ASICs have traditionally been relegated to entities with unlimited budgets, like governments.

IDG News Service
