The end of architecture

Over the years I have, somehow, managed to interview a lot of interesting characters from the tech world, such as Jef Raskin and Bruce Horn from the original Mac team at Apple, and free software pioneers Bruce Perens and Richard Stallman. Speaking to these luminaries is rewarding because it offers a chance to hear the history of computing from the people who were there doing the actual work.

One of the greatest pleasures of my career was interviewing Steve Furber and Sophie Wilson, designers of, among other things, the BBC Microcomputer. One of those ‘other things’ was the Arm CPU architecture.

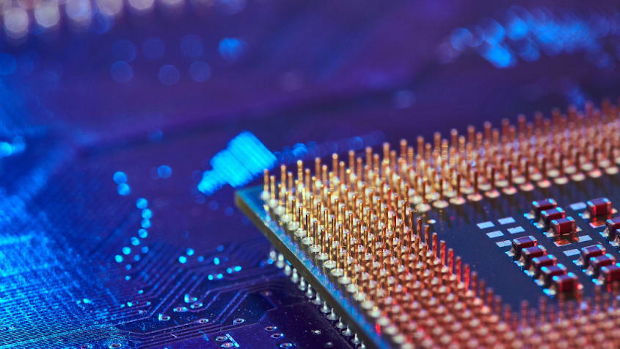

The story is a great one, not only because Arm-derived CPUs now dominate the vast low-power market, from smartphones to all manner of industrial devices, but because it is an underdog tale: a scrappy team of upstarts develops an architecture that initially finds only niche uses but ends up dominating computer hardware. By 2021, 6.7 billion Arm CPUs had been produced by a dizzying array of manufacturers.

Better still, it comes with a good yarn attached. When testing the prototype, Furber and Wilson found that it worked even though it had not been wired into the power supply. The CPU design was so efficient that it drew all the power it needed from current leaking in from the surrounding logic circuits. If that doesn't make it obvious why Arm came to dominate mobile computing, perhaps it should.

But Arm chips are not just found in phones, cars and washing machines. In 2020, Apple adopted Arm CPUs for its Macintosh computers, having used them in the iPhone since day one.

To make the switch, Apple had to unleash emulation so that software developed for x86-based Macs would still run. As it happens, the result is emulation so good that, in some cases, translated code runs faster on Apple's Arm chips than it does on the x86 silicon it was written for. Not quite a death blow to x86, but a blow to the head at least.

The company has some experience here. Apple has built emulation into the Macintosh operating system before, first seamlessly emulating Motorola 68000-series (68k) processors on PowerPC-based systems, then doing the same for PowerPC on x86. Moreover, today’s macOS is a direct descendant of NextStep, developed by Next Inc., the late Steve Jobs’s other computer company. Next successfully ported NextStep from its original 68k roots to the x86, Sparc and HP PA-RISC architectures, so developing for different architectures is clearly in Apple's DNA.

Emulation antics

This, I think, is where things get interesting. Processor wars have dominated personal computing since its very beginnings. The eight-bit battle was between MOS Technology’s 6502 and the Zilog Z80. The 16- and 32-bit era followed with, first, the face-off between Motorola’s 68k and Intel’s x86, and then the contest between the victorious x86 and sundry reduced instruction set computing (RISC) chips, including the PowerPC from Apple, Motorola and IBM, DEC's Alpha and MIPS Technologies’ eponymous MIPS CPUs.

Today, things are beginning to change, and Apple’s emulation antics are only a small part of the picture. The cloud, virtualisation and containerisation are all moves toward further abstraction away from bare metal.

This week, Bhumik Patel, Arm’s director for software ecosystem development, posted on the company’s blog that he believes the future is a heterogeneous, multi-architecture one in which software will have to run across all of them.

“The key to enabling a robust software ecosystem is to strive towards a frictionless development experience for a wide array of developers to achieve multi-architecture support for their software. This involves enabling easy platform access to write code, test functionality, and perform required optimisations,” he wrote.

Patel was clear that the driver behind this is the growth in cloud and edge computing deployments, which certainly reflects the reality of development today.

It is also another indication, however, that even on the desktop, hardware will soon matter less than interoperability. What starts on the servers will make its way down. After all, why should anyone care about esoteric matters such as CPU architectures as long as they get the processing power they need?

This has not happened yet. In truth, Windows on Arm has so far struggled, though not really for technical reasons. Instead, a lack of focus on the architecture, including the absence of 64-bit x86 (x64) emulation until Windows 11, has meant that neither software developers nor CPU manufacturers have had much incentive to push on the laptop or desktop fronts. Expect this to change, pronto.

Silicon does matter, and the Arm architecture is amazing, but it is increasingly obvious that the future is virtualised, abstracted and software-defined. In a way that is sad for those of us with an interest in the bare metal, as the passage of time always is. But it is probably for the best.

The CPU wars are not quite over yet: Intel is investing heavily and has just unveiled what it says is the world’s fastest desktop CPU, while AMD and Nvidia are already riding high. For the decades to come, though, the computer as an appliance, or even as a service, really does seem to be the direction of travel.
