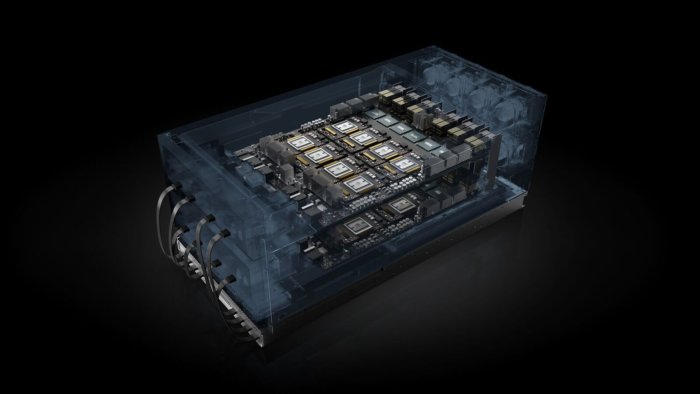

Nvidia is refining its pitch for data-centre performance and efficiency with a new server platform, the HGX-2, designed to harness the power of 16 Tesla V100 Tensor Core GPUs to satisfy requirements for both AI and high-performance computing (HPC) workloads.

Data-centre server makers Lenovo, Supermicro, Wiwynn and QCT said they would ship HGX-2 systems by the end of the year. Some of the biggest customers for HGX-2 systems are likely to be hyperscale providers, so it’s no surprise that Foxconn, Inventec, Quanta and Wistron are also expected to manufacture servers that use the new platform for cloud data centres.

The HGX-2 is built using two GPU baseboards that link the Tesla GPUs via NVSwitch interconnect fabric. The HGX-2 baseboards handle 8 processors each, for a total of 16 GPUs. The HGX-1, announced a year ago, handled only 8 GPUs.

Nvidia describes the HGX-2 as a “building block” around which servers makers can build systems that can be tuned to different tasks. It is the same systems platform on which Nvidia’s own, upcoming DGX-2 is based. The news here is that the company is making the platform available to server makers along with a reference architecture so that systems can ship by the end of the year.

Nvidia CEO Jensen Huang made the announcement at the company’s GPU Technology Conference in Taiwan.

Nvidia said two months ago at the San Jose iteration of its tech conference that the DGX-2, expected to be the first HGX-2-based system to ship, will be able to deliver two petaflops of computing power — performance usually associated with hundreds of clustered servers. DGX-2 systems start at $399,000 (€343,250).

Nvidia said HGX-2 test systems have achieved record AI training speeds of 15,500 images per second on the ResNet-50 training benchmark, and can replace up to 300 CPU-only servers that altogether would cost millions of dollars.

GPUs have found a niche in training data sets — essentially creating neural-network models — for machine-learning applications. The massively parallel architecture of GPUs make them particularly suitable for AI training.

The selling point for the HGX-2 is that it can be configured for both AI training as well as inferencing: actually putting the neural networks to use in real-life situations. The HGX-2 also targets HPC applications for scientific computing, image and video rendering, and simulations.

“We believe the future of computing requires a unified platform,” said Paresh Kharya, group product marketing manager for AI and accelerated computing at Nvidia. “What’s really unique about HGX-2 is its multi-precision computing capabilities.”

Kharya said the platform allows for high-precision calculations using up to FP64 (64-bit or double-precision floating point arithmetic) for scientific computing and simulations, while also offering FP16 (16-bit or half-precision floating point arithmetic) and Int8 (8-bit integer arithmetic) for AI workloads.

Each HGX-2 baseboard hosts six NVSwitches that are fully non-blocking switches with 18 ports, such that each port can communicate with any other port at full NVLink speed, Nvidia said. NVlink is Nvidia’s own interconnect technology, which has already been licensed by IBM.

The two baseboards in each HGX-2 platform communicate via 48 NVLink ports. The topology lets all 16 GPUs (eight on each baseboard) communicate with any other GPU simultaneously at full NVLink speeds of 300GB per second, Nvidia said.

“We’re breaking a lot of classic boundaries with this system,” said Kharya. “We’re pushing the limits on what a single system can do on 10 kilowatts of power.”

Nvidia also announced that it is offering eight classes of GPU-accelerated server platforms, each using dual Xeon processors for CPUs, but with different GPU core counts and configured differently for various AI and HPC needs. At the high end, Nvidia’s HGX-T2 is based on the HGX-2, with 16 Tesla V100 GPUs and tuned for training giant multilevel machine-learning neural networks. At the low end, Nvidia is offering the SCX-E1, with two Tesla V100 GPUs, which incorporates PCIE interconnect technology; this systems draws 1,200 watts and is aimed at entry-level HPC computing.

In Nvidia nomenclature, HGX-T systems are for AI training, HGX-I systems are for AI inferencing, and SCX systems are for HPC and scientific computing.

Nvidia has a firm grip on the market for GPUs aimed at AI workloads, but is destined to face increasing competition. Intel bought deep-learning start-up Nervana Systems in 2016 and is now finishing work on what it calls the Intel Nervana Neural Network Processor (NNP). In addition, FPGA makers like Xylinx are offering ever-more powerful FPGAs (field programmable gate arrays) that are being snapped up for AI inferencing.

While FPGAs lack the brute performance power to compete with GPUs for AI training, they can be programmed to process each level of a neural network, once it’s built, with the least precision suitable for that layer — a flexibility ideal for inferencing.

IDG News Service

Subscribers 0

Fans 0

Followers 0

Followers