“Cybersecurity professionals need real-time big data analytics to provide a comprehensive and contextually intelligent view of all security data to enable rapid detection and response to advanced security threats,” said Jamie Engesser, vice president and general manager of emerging products, Hortonworks. “Traditional security tools with a rules-based approach do not scale to match the speed and frequency of modern cybersecurity threats, and that is why we are so excited about the Apache Metron community’s momentum aimed at tackling this issue for the enterprise.”

HDP

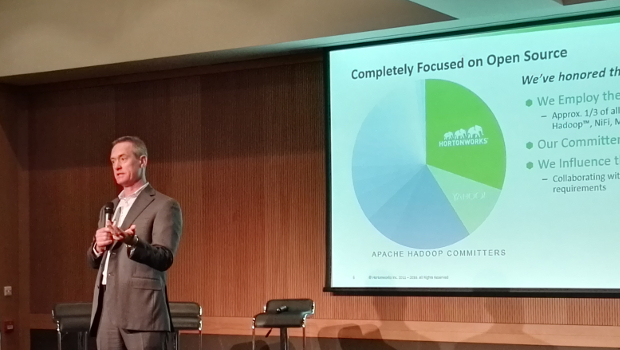

Hortonworks also announced several key updates to the Hortonworks Data Platform (HDP), which allows users to accumulate, analyse and act on information derived from their data.

The company said this latest Extended Services release fulfils a recently announced distribution strategy of delivering rapid innovations from the Apache Hadoop community to customers.

For the first time, Hortonworks said, Apache Ranger for security and Apache Atlas for data governance are integrated, enabling users to define and implement dynamic, classification-based security policies. With the integration, available as a technical preview, enterprises can use Atlas to classify data and assign metadata tags, which Ranger then enforces through access policies. In addition, Atlas now provides cross-component lineage, giving a more complete view of how data moves across multiple components.
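The idea behind classification-based policies can be illustrated with a minimal, hypothetical Python sketch. It does not use the Atlas or Ranger APIs; the names `Catalog`, `TagPolicy` and `is_allowed` are invented for illustration. The point is that a policy is attached to a tag rather than to a specific table, so tagging a new data asset brings it under an existing policy without editing the policy itself.

```python
from dataclasses import dataclass, field

# Hypothetical toy model, NOT the Apache Atlas or Apache Ranger API.
# A catalogue assigns classification tags to data assets (the Atlas role);
# access rules are written against tags, not assets (the Ranger role).


@dataclass
class Catalog:
    # Maps an asset name to the set of classification tags assigned to it.
    tags: dict[str, set[str]] = field(default_factory=dict)

    def classify(self, asset: str, tag: str) -> None:
        """Assign a classification tag to an asset."""
        self.tags.setdefault(asset, set()).add(tag)


@dataclass
class TagPolicy:
    tag: str                 # classification the policy applies to
    allowed_groups: set[str] # groups permitted to access tagged assets


def is_allowed(catalog: Catalog, policies: list[TagPolicy],
               asset: str, user_groups: set[str]) -> bool:
    """Deny access if any tag on the asset has a policy the user's groups
    do not satisfy. Untagged assets are allowed in this simplified sketch."""
    for tag in catalog.tags.get(asset, set()):
        for policy in policies:
            if policy.tag == tag and not (policy.allowed_groups & user_groups):
                return False
    return True


if __name__ == "__main__":
    catalog = Catalog()
    policies = [TagPolicy("PII", {"hr"})]
    catalog.classify("hr.salaries", "PII")
    print(is_allowed(catalog, policies, "hr.salaries", {"analysts"}))  # False
    print(is_allowed(catalog, policies, "hr.salaries", {"hr"}))        # True
    # Tagging another asset protects it under the same policy automatically.
    catalog.classify("web.logs", "PII")
    print(is_allowed(catalog, policies, "web.logs", {"analysts"}))     # False
```

In this sketch, assets with no tags are allowed by default; a production system would typically also apply other access rules alongside tag-based ones.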

Automated cloud provisioning

Another announcement concerned automated provisioning for Hadoop in any cloud. Cloudbreak allows enterprises to simplify and automate the provisioning of clusters in the cloud and to fine-tune their use of cloud resources. Cloudbreak version 1.2 is now available. It expands support to OpenStack for private cloud and to Windows Azure Storage Blob (WASB) for Microsoft Azure, and adds the ability to run scripts either before or after cluster provisioning.

Cluster operations will be simplified by the upcoming release of Apache Ambari, which features pre-built dashboards for HDFS, YARN, Hive, and HBase showing key performance indicators for cluster health. According to the company, the dashboards capture nearly a decade's worth of operational best practices from its support and engineering teams, helping customers troubleshoot more effectively and resolve issues faster.

Zeppelin exploration

Apache Zeppelin is a browser-based, notebook-style user interface that lets analysts and data scientists explore their data interactively and perform sophisticated analytics. This final technical preview provides customers with an agile analytics experience for Apache Spark running on a secure Hadoop cluster.

“Hortonworks continues to deliver on its vision of building trusted governance and enterprise security for Hadoop,” said Tim Hall, vice president of product management, Hortonworks. “This milestone, and the additional cloud, operations and data science advancements in HDP, allow customers to move at the speed of their data. We are matching the pace of innovation occurring across Apache project teams in the community and delivering the latest innovations to our customers in a timely manner.”

TechCentral Reporters