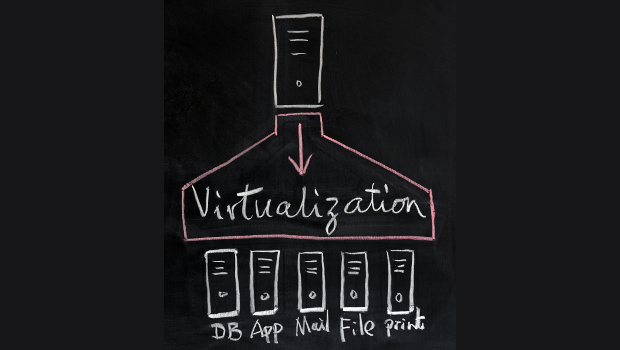

The promise that virtualisation would take away the headaches of old-style hardware limitations has long echoed down the halls of every IT department. Consolidation, utilisation, efficiency, availability, resilience, flexibility and security were all words bandied about, and virtualisation is indeed capable of delivering on all of these, but only if it is done properly.

A key issue with new technologies, and virtualisation in particular, is that people tend to revert to old usage styles despite new capabilities. So whether it's server virtualisation, software-defined networking or storage, too often a single feature, or a narrow set of features, is the initial win for IT, which then reverts to using the new infrastructure like the old one-app, one-server environment.


This often leads to issues, in terms of failure to show return on investment or expected performance gains, but a recent survey shows that the situation might be even worse.

According to a survey of 5,500 companies internationally by Kaspersky Lab, when a security incident involves virtualised infrastructure, the cost of recovery can as much as double. This applies whether the virtualised environment is part of a public or private cloud, the survey found.

Kaspersky Lab found that enterprises paid an average of $800,000 (€692,188) to recover from a security breach involving virtualisation, compared to $400,000 (€346,094) in traditional environments. Small and midsized businesses, meanwhile, saw costs rise from an average of $26,000 (€22,495) to $60,000 (€51,910) with virtualisation.

That is quite staggering, but alas, on closer analysis, the story is all too familiar.

According to Andrey Pozhogin, senior product marketing manager at Kaspersky Lab, there were three main reasons for this, all of which are avoidable.

The survey showed that many IT pros assumed that virtual servers were naturally more secure than traditional bare-metal servers, especially when it came to viruses, with some 42% expressing that sentiment. This is despite the fact that viruses and malware have adapted to virtual environments. Pozhogin said that not only can some viruses infect a VM before it can load its own antivirus measures, but some can jump from VM to VM and even infect the underlying hypervisor.

This is compounded by the fact that just over a quarter (27%) reported using security packages that were not specifically designed for virtualised environments.

Going beyond security, assumptions were also made about disaster recovery, which is often presumed to come free with a virtual environment. Not so, says Pozhogin.

Pozhogin said that because setting up virtualised environments can be expensive and labour intensive, organisations often postpone disaster recovery and fault tolerance measures until later, and these often end up never being implemented. When an incident does occur, organisations frequently find that re-establishing the virtual environments in which their critical VMs run is more costly and time consuming than expected, adding further to delays and bills, as the survey found.

Another reason for the higher costs is that so many organisations now run mission-critical workloads in virtualised environments. This means that when there is a problem, a critical process link is often broken, which can bring down the business. Two-thirds of survey respondents said that they lost access to business-critical information during an incident involving virtualisation, compared with just 36% in a traditional environment.

With stories about VM sprawl and unexpected cascade failures in virtual environments, it seems many organisations are simply carrying their bad habits over into the virtual world, almost ensuring expensive problems down the line.

The old adage "never outsource a problem" seems particularly salient here, and can be adapted for the virtual world: don't virtualise a bodge, and certainly don't virtualise anything that falls short of best practice.

Pozhogin goes further and advises organisations to ensure that their security measures are up to the task and are designed with virtual environments in mind. Disaster recovery also needs to be clearly defined and adapted to the environment, not simply assumed because of the inherent capabilities of virtualised infrastructure. He warns that both security and disaster recovery need to be included as key points at the outset of any architecture, not bolted on afterwards. As this refrain is now being chanted among application developers too, one wonders why so much wisdom seems to have evaporated in the move to the virtual, or arguably the mobile, world.

Critical lessons and good practice seem to be lost amid the rush to virtualise, and that does not bode well for the uptake of hybrid and public cloud either. If organisations continue to ignore these issues as they move from on-premises virtual environments to public infrastructure, the poor practices and assumptions they carry with them will leave them vulnerable.

The fact that the potential costs are not marginally higher but potentially double should be a wake-up call for organisations to take this seriously. This is underlined by recent pronouncements from EMC VP Randy Bias, who warned that vendor rhetoric around the ease of deploying open source cloud stack technologies on whitebox infrastructure is foggy at best, and that such projects end up being more costly than expected. This is often down to the complexity of the architectures, difficulty in deployment and a lack of skills. And this is from an OpenStack advocate!

It is often remarked that in Ireland, we are 6-12 months behind the curve in adopting new technologies. Many cite this as a boon, as Irish organisations can learn from the mistakes of early adopters. Well, a key point from both of these stories is that organisations are currently failing to heed the lessons of the past, as well as failing to grasp key requirements before embarking on virtualisation and cloud projects. We would do well to take heed of both.
